Data Intellect

LangChain is a framework for developing applications powered by Large Language Models (LLMs) such as OpenAI GPT. Once trained with data supplied by the user as text or PDF files through the LangChain Python API, the resulting LLM can be queried, including in a conversational manner. We found that a GPT model trained with the raw text of a Data Intellect blog answered the questions it was asked almost perfectly. Further experiments are needed to assess the possibilities and limits of this technology.

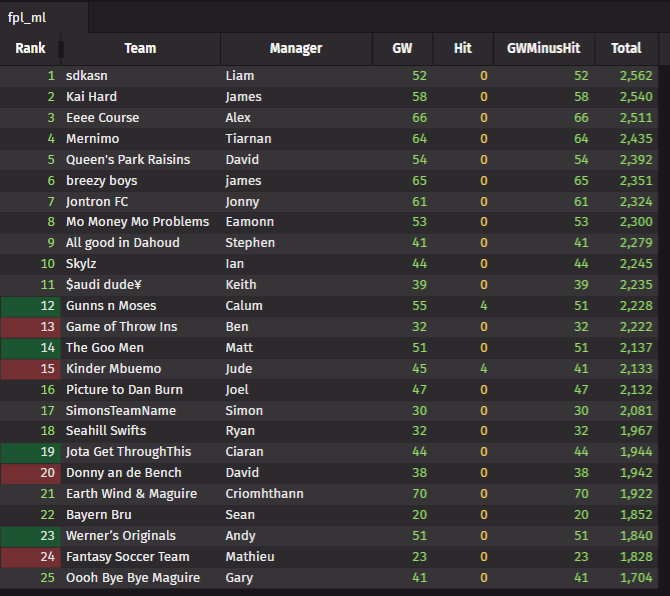

Methodology

Python source code

```python
import os

from langchain.document_loaders import TextLoader
from langchain.indexes import VectorstoreIndexCreator

# Loads the OpenAI private key, see https://platform.openai.com/account/api-keys
with open("./openai_api-key.txt") as oakf:
    # Sets the OPENAI_API_KEY env variable for the OpenAI API runtime
    # (strip() drops a trailing newline that would otherwise corrupt the key)
    os.environ["OPENAI_API_KEY"] = oakf.read().strip()

# Loads the text of my previous blog
loader = TextLoader("./article_on_software_safety.txt")

# Trains the LLM from the text (in practice, builds a vector index over it)
index = VectorstoreIndexCreator().from_loaders([loader])

# Model interrogation
print(index.query("What is software safety?"))
print(index.query("What are the key points made in this article?"))
print(index.query("What is the publication date, author and company who published this article?"))
```
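To make the indexing step less of a black box: as far as we understand it, VectorstoreIndexCreator does not change the model's weights. It splits the text into chunks, computes an embedding for each chunk, and at query time passes the most similar chunks to the LLM. The sketch below imitates that retrieval step with the standard library only. The bag-of-words `embed` function, the helper names and the two sample chunks are illustrative assumptions, not LangChain code.

```python
import math
import re
from collections import Counter


def split_into_chunks(text, chunk_size=40):
    """Split text into chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(chunks, question, k=1):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(embed(c), q), reverse=True)[:k]


# Illustrative chunks only, loosely paraphrasing the article used above
chunks = [
    "Software safety is the practice of preventing memory errors and type errors.",
    "The article was published by Data Intellect.",
]
best = retrieve(chunks, "What is software safety?")
print(best[0])
```

In the real pipeline the retrieved chunks are then handed to the LLM together with the question, which is why the quality of the answers depends on how well the relevant passages are retrieved.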

Results

The answers produced by the model are very accurate (barring the fact it was not able to guess who I am).

> Query: What is software safety?

Software safety is the practice of preventing errors and security vulnerabilities in software, such as type errors, memory errors, thread errors, and bounds errors. It also includes initiatives to ensure that software is safe to use and meets certain standards.

> Query: What are the key points made in this article?

The key points made in this article are that the view taken by government regulation bodies is to use memory safe languages, the timeline of guidelines published by the European Union, the UK government, and USA government reveals an increasing focus on software safety, and Data Intellect's data driven strategy is to inform its clients of potential challenges ahead, clarify the nature of those challenges, address them and eventually turn them into opportunities.

> Query: What is the publication date, author and company who published this article?

This article was published by Data Intellect in 2023. The author is not specified.

Discussion

User data versus GPT standard models

In this instance, the scope of the LLM's knowledge is limited to the input data, which has pros and cons:

- The model is incapable of answering questions outside of this limited scope. This potentially reduces the risk of model hallucinations or irrelevant answers.

- The LangChain API can display the file name of its sources, which contrasts with the online GPT models.

- If the model cannot find the information, it will simply answer “I do not know”.
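The behaviour in this list largely comes from the prompt that wraps the retrieved text. The sketch below shows one way such a prompt could be assembled. The function name `build_prompt` is hypothetical, and the instruction wording is modelled from memory on LangChain's default question-answering prompt, so it may differ between versions.

```python
def build_prompt(context_chunks, question):
    """Assemble a prompt that restricts the model to the retrieved context."""
    context = "\n\n".join(context_chunks)
    return (
        "Use the following pieces of context to answer the question at the end. "
        "If you don't know the answer, just say that you don't know, "
        "don't try to make up an answer.\n\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Helpful Answer:"
    )


# Illustrative call with a made-up context chunk
print(build_prompt(["Software safety is about preventing errors."], "What is software safety?"))
```

Because the model only sees the chunks placed in the prompt, a question whose answer is absent from them tends to produce the "I do not know" reply.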

It is also possible to combine user data with the standard GPT LLM by specifying an llm argument to the index query.
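A minimal sketch of passing that argument follows. The wrapper name `ask` is hypothetical, and `index` is assumed to be the object returned by `VectorstoreIndexCreator().from_loaders(...)` in the script above. How far the answers then draw on the model's general knowledge will depend on the model and prompt used.

```python
def ask(index, question, llm=None):
    """Query the index, optionally with a caller-supplied language model."""
    if llm is None:
        # Falls back to whatever model the index uses by default
        return index.query(question)
    return index.query(question, llm=llm)


# Hypothetical usage with a chat model (requires langchain and an OpenAI API key):
#   from langchain.chat_models import ChatOpenAI
#   print(ask(index, "What is software safety?", llm=ChatOpenAI(temperature=0)))
```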

Opportunities enabled by this technology

Training GPT language models with user-provided data opens up a wide range of applications. For instance, a company could train a GPT model with the entire textual content of its content management system, and thus interrogate that content far more productively than with simple text searches. During the test phase, the script notably learned a 200-page book on property investments in roughly 30 seconds.

It could also be used as a tool for data analysis. For instance, the Datasette project, a SQLite-based data analytics tool, has developed a ChatGPT plugin to query data tables in conversational English. A similar strategy could potentially be applied to KDB time series data.
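As a rough illustration of the idea (not the Datasette plugin's actual implementation), the sketch below hands the table schema and a question to a translator and runs the SQL it returns against SQLite. The `fake_translate` function stands in for an LLM call, and the `trades` table and its rows are made up for the example.

```python
import sqlite3


def table_schema(conn):
    """Return the CREATE statements of all tables, to show the model the schema."""
    rows = conn.execute("SELECT sql FROM sqlite_master WHERE type = 'table'").fetchall()
    return "\n".join(row[0] for row in rows)


def answer_question(conn, question, translate):
    """Translate a question into SQL with `translate` and run it if it is a SELECT."""
    sql = translate(table_schema(conn), question)
    if not sql.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    return conn.execute(sql).fetchall()


def fake_translate(schema, question):
    # Stand-in for an LLM call; a real one would build a prompt from schema and question
    return "SELECT sym, MAX(price) FROM trades GROUP BY sym ORDER BY sym"


# Made-up sample data
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE trades (sym TEXT, price REAL)")
conn.executemany("INSERT INTO trades VALUES (?, ?)", [("A", 1.0), ("A", 2.5), ("B", 4.0)])
rows = answer_question(conn, "What is the highest price per symbol?", fake_translate)
print(rows)
```

Rejecting anything that is not a SELECT is a deliberately crude safeguard here; generated SQL should be treated as untrusted input.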

For the sake of simplicity, these features are not shown here, but it is very easy to:

- Turn it into a chatbot rather than running queries.

- Add knowledge incrementally to the LLM through a conversation.
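One possible shape for the chatbot variant is sketched below, assuming the legacy LangChain imports used in the script above. The helper names `make_chat_chain` and `chat_turn` are hypothetical. The conversation history is passed back on every turn, which is how earlier exchanges can inform later answers.

```python
def make_chat_chain(index):
    """Build a conversational chain over the index (assumes legacy LangChain imports)."""
    from langchain.chains import ConversationalRetrievalChain
    from langchain.chat_models import ChatOpenAI

    return ConversationalRetrievalChain.from_llm(
        ChatOpenAI(temperature=0),
        retriever=index.vectorstore.as_retriever(),
    )


def chat_turn(chain, history, question):
    """Ask one question, record the exchange in history, and return the answer."""
    result = chain({"question": question, "chat_history": history})
    history.append((question, result["answer"]))
    return result["answer"]


# Hypothetical usage (requires langchain and an OpenAI API key):
#   chain = make_chat_chain(index)
#   history = []
#   print(chat_turn(chain, history, "What is software safety?"))
#   print(chat_turn(chain, history, "Which regulators are mentioned?"))
```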
