An obituary for Time Series Data?

Gary Davies

In the world of time series data, we focus on capturing data from live systems and then saving it for historical use. Once the data is persisted to disk, especially with kdb+, it simply becomes part of the platform we maintain: occasionally backfilled, occasionally modified, very rarely removed.

The piece we rarely discuss, unless budgets force us to, is the cost of that data. When we do, in my experience, the conversation is usually about the storage itself and how we might move to "lesser grade" storage that costs a little less.

What we tend to avoid are two bigger questions that we really should address:

- What data can I delete?

- What is my data-on-disk strategy?

The first question has a simple answer and a more complicated one. The simple answer is none of it, or at least not for N years, because of audit requirements. The complicated answer is that it depends on the clients of the data. Funnily enough, this should also be the answer to the second question.

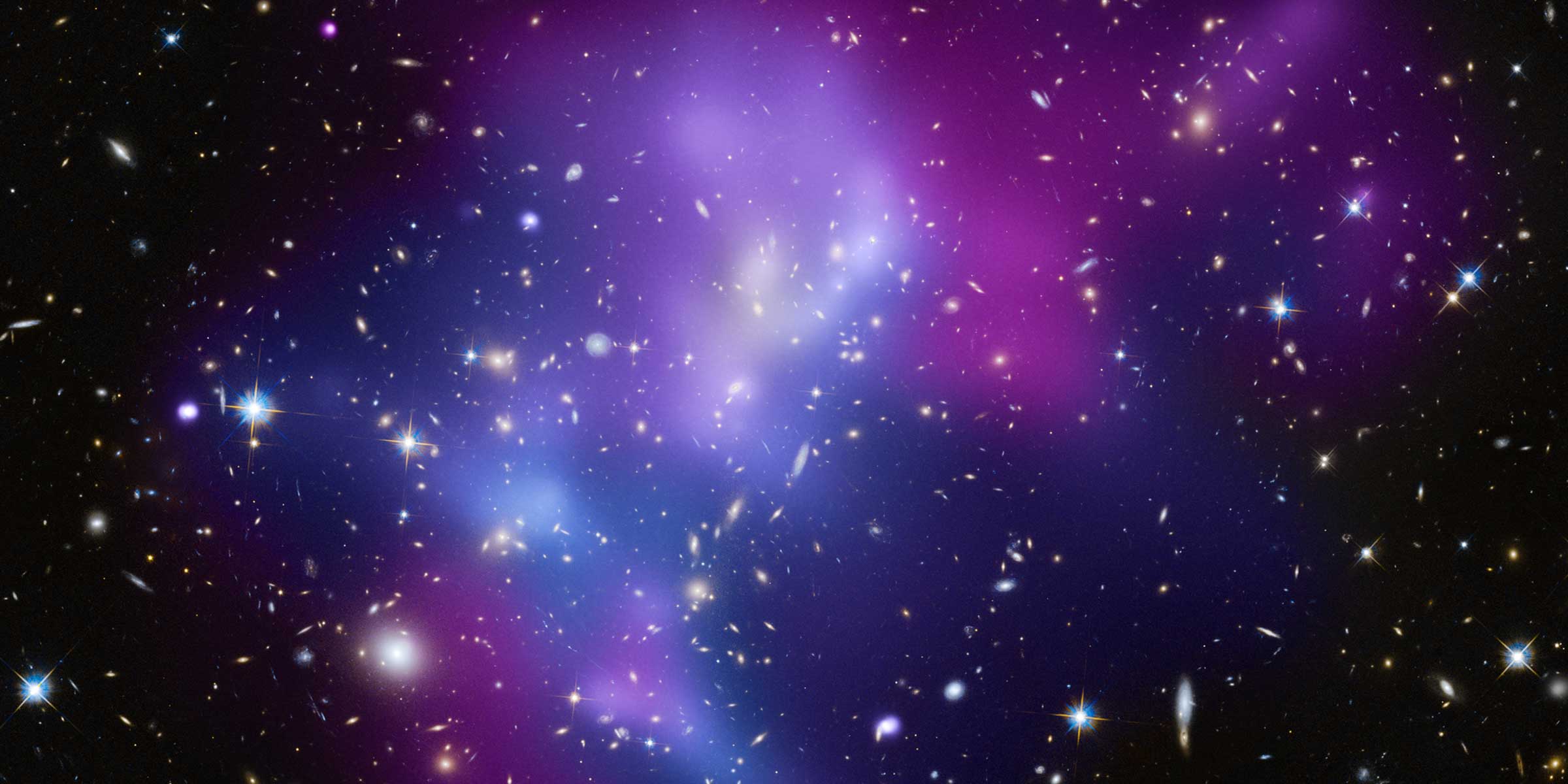

Often, especially with the rise of cloud storage, we label data hot, warm or cold depending on how often it is used. This qualitative categorization is useful, but it leaves out the most critical category: dead data. Dead data is data that is no longer used and no longer relevant. If we think about the high-level purpose of time series data, it is about capturing data that changes over time so that we can use the raw and derived data to analyze and make decisions. Those decisions, directly or indirectly, make money for our companies. Dead data, then, doesn't make us any money, yet it costs us to keep it: it has become a loss-making dataset.

We should therefore define our data-on-disk strategy according to how likely our data is to be profitable:

- Hot data: regularly used by end users to aid decision making. It's making money for us. Could be SSD or good-quality SAN.

- Warm data: still used fairly regularly, usually for larger analyses, e.g. YTD or YOY. Could be lower-quality SAN or object storage (depending on performance requirements).

- Cold data: less relevant and used ad hoc. Could be loaded only when needed or stored in the cloud.

There is no mention of dead data above because it should be deleted, and we should have processes in place to do that. If we find there is too much warm or cold data, we don't have to demote it straight to dead; we could instead downsample it, reducing rows, columns or precision in a way that does not affect overall decision making.
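As an illustration of the downsampling idea, here is a minimal sketch in Python (the post itself doesn't prescribe an approach) that collapses raw trade ticks into one-minute OHLCV bars and rounds prices to fewer decimals, reducing both rows and precision. The `Tick`/`Bar` record layouts, the 60-second bucket and the 4-decimal rounding are hypothetical choices for illustration; in a kdb+ shop the same reduction would more naturally be a q `select ... by xbar` query on the HDB.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List


@dataclass(frozen=True)
class Tick:
    # Hypothetical raw trade record.
    time: datetime
    price: float
    size: int


@dataclass(frozen=True)
class Bar:
    # One downsampled bucket: open/high/low/close price plus total volume.
    start: datetime
    open: float
    high: float
    low: float
    close: float
    volume: int


def downsample(ticks: Iterable[Tick], bucket_seconds: int = 60, decimals: int = 4) -> List[Bar]:
    """Collapse time-sorted ticks into fixed-width OHLCV bars.

    Prices are rounded to `decimals` places to reduce precision as well as rows.
    Assumes the ticks are already sorted by time.
    """
    width = timedelta(seconds=bucket_seconds)
    bars: List[Bar] = []
    for t in ticks:
        # Floor the tick time to the start of its bucket, measured from midnight.
        midnight = t.time.replace(hour=0, minute=0, second=0, microsecond=0)
        start = midnight + ((t.time - midnight) // width) * width
        price = round(t.price, decimals)
        if bars and bars[-1].start == start:
            # Same bucket as the previous tick: extend the current bar.
            b = bars[-1]
            bars[-1] = Bar(start, b.open, max(b.high, price), min(b.low, price),
                           price, b.volume + t.size)
        else:
            # First tick of a new bucket opens a new bar.
            bars.append(Bar(start, price, price, price, price, t.size))
    return bars
```

Whether bars like these are an acceptable substitute for the raw ticks is exactly the question to settle with the data's clients before the originals are retired.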

To do all of this, we need two things:

- An understanding of the needs of the data's clients. This can come from discussions, from agreed SLAs, or even be proved out with query capture.

- An understanding of the costs of the available storage options.

From this, we can draft a data strategy and implement a solution that moves data through the hot, warm and cold tiers and, most importantly, does the rm -rf at the end.
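One way such a solution could be driven is from query capture. The Python sketch below assumes a date-partitioned historical database (the usual kdb+ layout) and a hypothetical mapping from each partition date to the last date it appeared in captured query logs (`None` if never queried). The thresholds `HOT_DAYS`, `WARM_DAYS`, `COLD_DAYS` and `RETENTION_DAYS` are made-up placeholders; real values would come from client SLAs and audit requirements. Note that it only flags dead candidates and never deletes anything, because the rm -rf should still wait for sign-off.

```python
from datetime import date
from enum import Enum
from typing import Dict, List, Optional


class Tier(Enum):
    HOT = "hot"
    WARM = "warm"
    COLD = "cold"
    DEAD = "dead"


# Hypothetical thresholds, in days since a partition was last queried.
HOT_DAYS = 30
WARM_DAYS = 365
COLD_DAYS = 3 * 365
# Hypothetical audit retention: nothing younger than this may be marked dead.
RETENTION_DAYS = 7 * 365


def classify(partition: date, last_queried: Optional[date], today: date) -> Tier:
    """Assign a tier from a partition's date and the last date it was queried."""
    past_retention = (today - partition).days > RETENTION_DAYS
    if last_queried is None:
        # Never seen in the query logs: dead only once audit retention has passed.
        return Tier.DEAD if past_retention else Tier.COLD
    idle = (today - last_queried).days
    if idle <= HOT_DAYS:
        return Tier.HOT
    if idle <= WARM_DAYS:
        return Tier.WARM
    if idle <= COLD_DAYS or not past_retention:
        return Tier.COLD
    return Tier.DEAD


def plan(last_access: Dict[date, Optional[date]], today: date) -> Dict[Tier, List[date]]:
    """Group partitions by tier; DEAD entries are candidates for sign-off, not deletion."""
    result: Dict[Tier, List[date]] = {t: [] for t in Tier}
    for partition in sorted(last_access):
        result[classify(partition, last_access[partition], today)].append(partition)
    return result
```

Keeping the retention check separate from the idle check reflects the "not for N years" answer above: a partition nobody queries can still be forced to stay cold until audit allows it to die.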

The key takeaway from this blog is for everyone to look around for data that might be dead, check its pulse (by looking at query logs and speaking to folks), have the doctor sign the death certificate (audit/compliance/quant sign-off), and send that data to the big Unix box in the sky (NOT the cloud). And to answer the blog title: yes, let's write an obituary for our dead time series data.

If we all did this, how much money could we save?
