Matt Doherty

Benchmarking LLMs

LLMs have already been a game changer for software engineers; just look at the usage of Stack Overflow since 2022. Now we’re beginning to get fully autonomous coding agents to do even more of our job for us! What a time to be a (just about still employed) software engineer. Having said all this, I think I can speak for most kdb+ people in saying that they still have a ways to go in our small niche, at least when compared to their performance for bigger languages like Python, Java, C++, or JavaScript. This is likely just a function of the size of the training set: a quick search on Stack Overflow for Python returns over 2 million questions, whereas kdb+ returns only 2 thousand. Others have also suggested the models find right-to-left evaluation tricky, resulting in lower performance overall.

However, within that general impression that LLMs are not that great at kdb+, which models are better? How much better have the models got over time? We feel these questions are worth answering.

A wide range of AI benchmarks exist and are collated on sites like epoch.ai. Benchmarks there include:

- SimpleBench, a test of everyday reasoning questions most humans can answer

- GeoBench tests if models can identify the location of a given photo

- GPQA Diamond tests a range of “Google-proof” questions in physics, chemistry and biology

- SWE-bench tests models’ ability to solve real-world software problems

- And many more…

These are all fairly specialized tests of LLMs’ capabilities. What we’re interested in here is a simple equivalent for kdb+: nothing too complex, just something similar to the questions you might be asked in a kdb+ interview, or typical day-to-day questions a software engineer might need to answer, some easy and some hard. The goal is to assess how well each model answers each question and to summarize the result as a single score. From this, we can recommend which models to trust and which to avoid, and get a sense of how models are improving over time.

Therefore, the two main things we want from our benchmark are:

- The score varies significantly from the best to worst models

- There is still room to improve for the best models

KDBench

There’s obviously no one right answer for how to do this, but we iterated on these requirements and here is the set we came up with. Full credit to Salim. We have 50 questions:

- 33 Easy – superficial kdb+ concepts, where the output required is a brief response for conceptual questions or one line of q for code-based questions. For example: “Using kdb+/q – create a list of 10 random numbers from 10 to 20”

- 14 Medium – more focused kdb+ concepts, where the output required is a multi-sentence response for conceptual questions or a few lines of q for code-based questions. For example: “Outline what .Q.dpft does and each parameter it takes”

- 3 Hard – complex kdb+ concepts, where the output required is a detailed response for conceptual questions or a multi-function q script for complex code-based questions. For example: “Using kdb+/q, create the Pascal’s Triangle solution to terminate when a value of greater than 100 is reached in the triangle”

A total of 134 points is available across these questions. Each question is scored on the individual components required in the final answer. Most questions are worth a maximum of 2 or 3 points, but some are worth up to 9.
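The tally described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the spreadsheet or script actually used for marking: the `Mark` type, the question labels and the two example marks are made up, and half points are assumed to be allowed.

```python
from dataclasses import dataclass


@dataclass
class Mark:
    question_id: str  # hypothetical label, not from the original question set
    awarded: float    # points given by the human marker (half points assumed allowed)
    maximum: int      # maximum points available for the question


def benchmark_score(marks):
    """Return (points awarded, points available, percentage) for one model."""
    for m in marks:
        if not 0 <= m.awarded <= m.maximum:
            raise ValueError(f"{m.question_id}: {m.awarded} is outside 0..{m.maximum}")
    awarded = sum(m.awarded for m in marks)
    available = sum(m.maximum for m in marks)
    return awarded, available, 100 * awarded / available


# Illustrative marks only: two questions rather than the full 50.
example_marks = [
    Mark("trim-commands", awarded=1, maximum=3),
    Mark("largest-palindrome", awarded=0.5, maximum=2),
]
print(benchmark_score(example_marks))
```

With the full question set, the second element of the result would be the 134 points available, and the third is the single percentage score used to rank the models.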

We used OpenRouter and a simple bash script to automate asking the models the questions and collecting the responses. Marking the answers still requires a human in the loop, unfortunately (again, thanks Salim!). Perhaps in a future iteration we could have LLMs mark the answers too, closing the loop.
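For anyone wanting to try something similar, here is a rough Python equivalent of that loop. It is a sketch under assumptions rather than the bash script we actually ran: it posts to OpenRouter's OpenAI-compatible chat completions endpoint, the function names are invented for this example, and the API key and model identifiers must be supplied by the caller.

```python
import json
import urllib.request

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(model, question, api_key):
    """Build (but do not send) a single-question chat completion request."""
    payload = {"model": model, "messages": [{"role": "user", "content": question}]}
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(model, question, api_key):
    """Send one question to one model and return the text of its reply."""
    request = build_request(model, question, api_key)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


def run_benchmark(models, questions, api_key, ask_fn=ask):
    """Ask every model every question; returns {(model, question): answer}."""
    return {
        (model, question): ask_fn(model, question, api_key)
        for model in models
        for question in questions
    }
```

Calling `run_benchmark` with a list of OpenRouter model identifiers and the question texts returns the raw answers ready for marking. The `ask_fn` parameter exists only so the loop can be exercised without network access.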

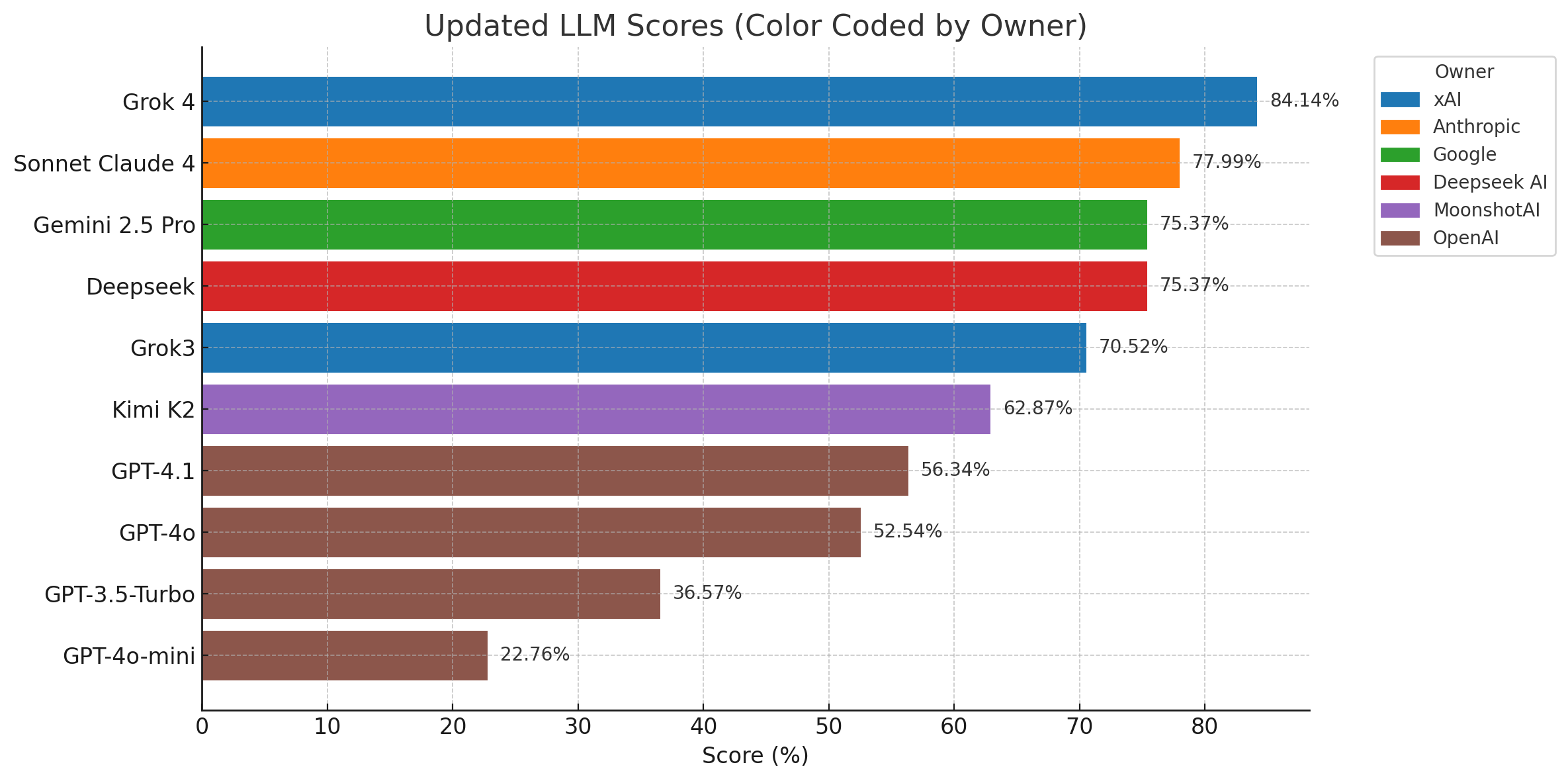

We tried the following 10 models, some cutting edge, and some older or cheaper, and here are the results we got in order from best to worst:

Benchmark Results

I don’t think the results here will come as a massive surprise to anyone, but there are some interesting details worth drawing attention to. It’s clear that the recent flagship models Grok, Claude and Gemini are the best, while the older and cheaper models perform significantly worse. The OpenAI models sit in the bottom tier, with the original GPT-3.5 (released November 2022) and GPT-4o-mini (released July 2024) answering more than half the questions incorrectly. The latest GPT-4.1 model (released in April 2025) shows about a 20% improvement compared to GPT-3.5. So, things have got a lot better with each release! If you tried GPT-3.5 or an early version of 4 and decided it was rubbish, now might be a good time to try the latest models again to see how far they’ve come.

In general, conceptual questions scored higher than raw q coding questions. Coding questions had much more unpredictable results, with many of the models confidently providing overly complex, error-prone q code. A general theme in the responses to coding questions was a set of potential solutions where one of them might work, followed by optimization suggestions that were hit-and-miss in terms of accuracy. Models would sometimes misinterpret the coding question and end up trying to solve something else. A mix of correct results with irrelevant, useless, or incorrect information was common. The scoring focused on whether the model had the correct results; under a stricter scoring system the models would have lost points for this. Conceptual questions were much more uniform between the models, and it was much easier to see quickly whether a model had answered the question accurately.

Here are two questions (an easy one and a hard one) from our benchmark set, to illustrate some differences between typical model responses:

// What are the 3 commands in kdb+/q that can remove leading and/or trailing white/empty spaces from strings (easy - 3 points)

// *** Claude Sonnet 4: (3/3) - [ltrim, rtrim, trim]

// *** Gemini 2.5 Pro (3/3) - [ltrim, rtrim, trim]

// *** Grok3: (3/3) - [ltrim, rtrim, trim]

// *** Deepseek: (3/3) - [ltrim, rtrim, trim]

// *** GPT-4o-mini: (1/3) - [trim, using each on multiple strings]

// *** GPT-4.1 (3/3) - [ltrim, rtrim, trim]

// *** GPT-3.5-Turbo (1/3) - [trim, trimr (doesn't exist), using sv (incorrect example)]

// *** Grok 4 : (3/3) - correct [trim, ltrim, rtrim]

// *** Kimi K2: (3/3) - correct [trim, ltrim, rtrim]

// *** GPT-4o: (1/3) - incorrect [ssr, trim, sv]

// Using kdb+/q - Find the largest palindrome that can be made from the product of two 3-digit numbers (hard - 2 points)

// *** Grok3: (2/2) - correct

// Function to check if a number is a palindrome

isPalindrome:{x~reverse x}

// Convert number to string to check if it's a palindrome

palindromeCheck:{isPalindrome string x}

// Generate all products of two 3-digit numbers (100-999)

products:{raze x*/:x} 100+til 900

// Find all palindromic products

palindromes:products where palindromeCheck each products

// Get the largest palindrome

largestPalindrome:max palindromes

// Find the pairs of 3-digit numbers that multiply to the largest palindrome

pairs:{(x,y) where x*y=largestPalindrome} . (100+til 900;100+til 900)

// Display results

show "Largest palindrome: ",string largestPalindrome

show "Pairs that multiply to it: ",string pairs

// *** Deepseek: (2/2) - correct

a:100+til 900; / Generate 3-digit numbers (100 to 999)

prod: raze a */: a; / Compute all products and flatten into a single list

s: string prod; / Convert each product to a string

pal: s ~' reverse each s; / Check if each string is a palindrome

res: max prod where pal; / Find the maximum product that is a palindrome

res / Return the result

// *** GPT-3.5-Turbo (0.5/2) - type error, did make a list to filter through but were not all products of 3 digit values

isPalindrome:{reverse x~x};

largestPalindrome:0;

range:reverse til 100 * 100;

{maxPalindrome:{largestPalindrome|max x where isPalindrome x}each x}range;

largestPalindrome

Conclusion

For the easier question, the modern models are all good, while the older and smaller models miss some answers. For the hard question, Grok and Deepseek both provide fairly solid, even reasonably idiomatic kdb+ code that works and is well commented (Grok goes slightly beyond what the question asks for and makes a small mistake in the last line). Claude and Gemini did not do so well on this question, with their code throwing errors on the first attempt. The older GPT-3.5 makes an attempt, but also does not succeed. Of course the new models still make mistakes, but they hit much more often than they miss on the questions in our set.
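When marking a coding answer like the palindrome one, it helps to have a reference value computed independently of q. The following Python sketch is not part of the benchmark and the function name is made up; it simply mirrors the brute-force approach the successful answers took (all products of two 3-digit numbers, keep the palindromes, take the maximum).

```python
def largest_palindrome_product(lo=100, hi=999):
    """Return (product, a, b) for the largest palindromic a*b with lo <= a <= b <= hi."""
    return max(
        (a * b, a, b)
        for a in range(lo, hi + 1)
        for b in range(a, hi + 1)          # b >= a avoids checking each pair twice
        if str(a * b) == str(a * b)[::-1]  # palindrome test on the decimal digits
    )


print(largest_palindrome_product())  # (906609, 913, 993)
```

Any q answer that returns 906609 has the right value, and 913 and 993 are the only pair of 3-digit factors that produce it.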

So the main takeaway here is that the cutting-edge models are probably better than you think at kdb+ now (as long as you stay away from OpenAI) and are getting better all the time. It seems reasonable to guess that in another 2 years they’ll get a lot better again. It’s also clear that it’s probably worth asking more than one model, as which is best varies somewhat from question to question. Gemini 2.5 Pro, Claude Sonnet 4, Deepseek R1, and Grok 3 currently have a free personal tier (Grok 4 is not free). The most recent models also have fairly recent training cut-offs: Grok, Claude and Gemini all know about features released in kdb+ 4.1. We’ll periodically come back and repeat this benchmark to see how these models improve over time.
