Matt Doherty

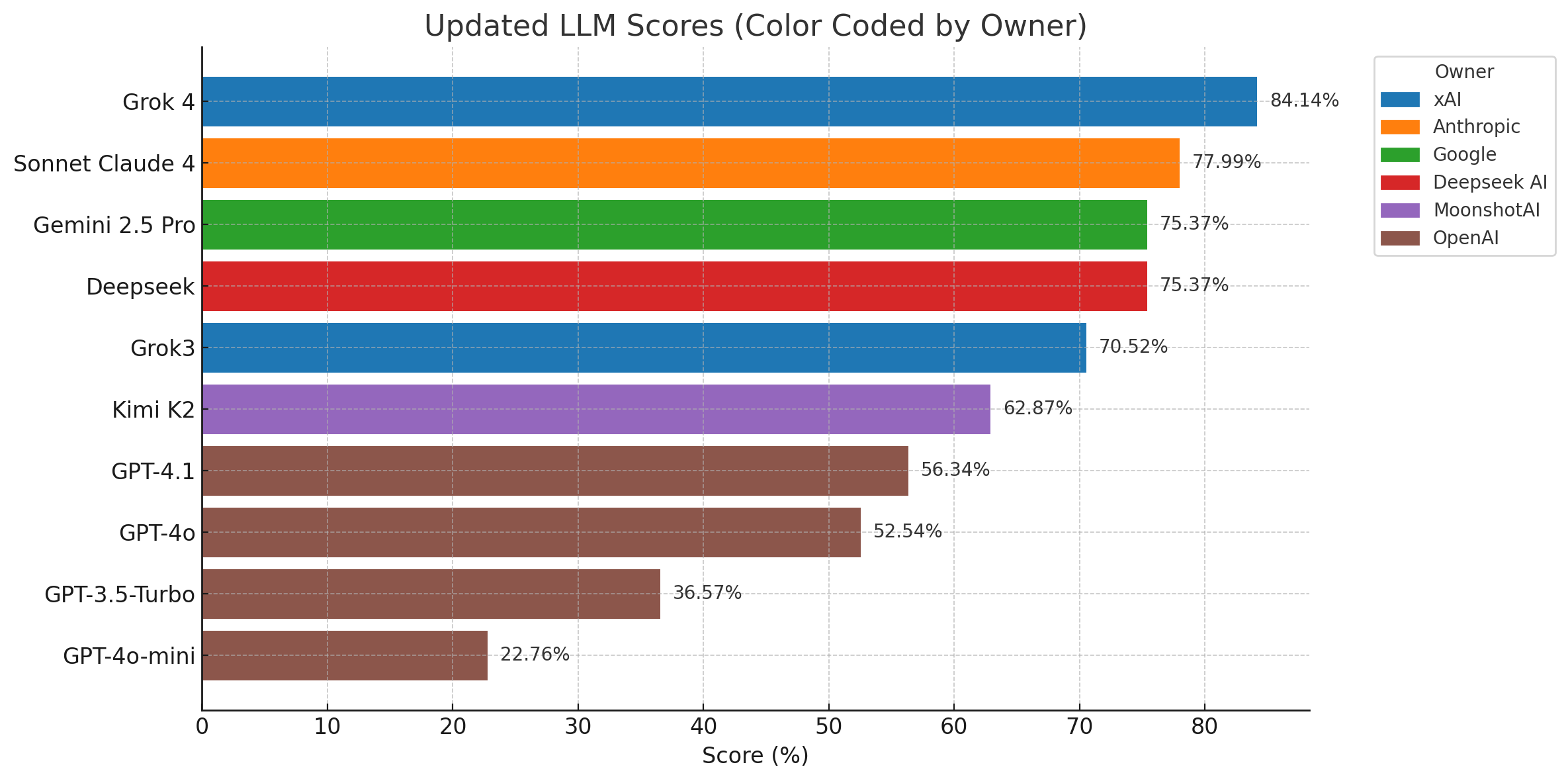

Since the turn of the year we’ve noticed a substantial uptick in the frontier models’ ability to write q code. We published a benchmark last summer that showcased their abilities at the time, but as with everything else in AI, the landscape evolves rapidly, and it feels like we’ve experienced another step change.

The step change is hard to define empirically, but from an “experience” point of view, we see it in q coding in two ways:

- the models get the code “more right” the first time. We see the same step up in other programming languages too.

- the code looks much more like something an experienced q developer would write. Historically we’ve seen a lot of Python- or Java-looking q code, which doesn’t take full advantage of the vectorised approach that makes q sing.
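To make the contrast concrete, here is a minimal sketch using Project Euler problem 1 (sum all multiples of 3 or 5 below 1000). It is our own illustration, not code taken from Claude’s output or the repo, and the names `loopSum` and `vecSum` are hypothetical. The first function is the Python/Java shape we used to see: a counter, a `while` loop and a running total. The second is the vectorised form: build the whole range with `til`, filter it with a boolean mask via `where`, and `sum` once.

```q
/ Loop style: a Python/Java-shaped translation that visits one number at a time
loopSum:{[n]
  s:0; i:0;
  while[i<n; if[(0=i mod 3) or 0=i mod 5; s+:i]; i+:1];
  s}

/ Vectorised style: build the whole range, keep the items where the mask is true, sum once
vecSum:{[n] v:til n; sum v where (0=v mod 3) or 0=v mod 5}

loopSum 1000   / 233168
vecSum 1000    / 233168
```

Both give the same answer; the vectorised version is shorter and hands the iteration to q’s primitives, which is generally where q performs best.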

We haven’t run the full benchmark suite, as most of our team gravitates towards Claude, and Opus 4.6 in particular. On our benchmark, Opus 4.6 scored 90%, beating the previous best, Grok 4, at 84%.

We also pointed Claude at Project Euler and asked it to have a crack at the first 10 problems, along with problem 50. It took 7 minutes to do all 10, and most of the functions it produced run in under a millisecond. Problem 50 is a few difficulty grades higher, and it solved that one in 30 seconds. As with everything GenAI, though, results can differ from run to run: we tried problem 50 a few times, and on one run it required considerable redirection.

With Claude, the setup context is key. We asked it to keep two pages in its context window: the q Idioms page and the iterators page (it’s reassuring to know that even Claude gets confused by its scans and overs). It produced some very good-looking q code, the kind we’d be pleased to see from a mid-level or senior developer. Idiomatic, and No Stinking Loops.
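For readers less familiar with q’s iterators, the distinction that trips people up is small but important: over (`/`) folds a function across a list and returns only the final result, while scan (`\`) returns every intermediate result. A minimal illustration of our own, not taken from the repo:

```q
/ over: fold + across the list, keep only the final value
+/[1 2 3 4]        / 10
/ scan: the same fold, but keep every intermediate value
+\[1 2 3 4]        / 1 3 6 10
/ the keywords sum and sums are the named equivalents
sum 1 2 3 4        / 10
sums 1 2 3 4       / 1 3 6 10
```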

The code repo with full CLAUDE.md is here.

It’s worth pointing out that Project Euler is a well-known set of programming challenges and will be well represented in Claude’s training set. So Claude isn’t really “solving the problems”; it is, however, writing the q code, and q solutions are less likely to be in the training set.

The models continue to improve, and q is less of an exception than it used to be. For those on our team with access to full tooling and enough tokens, the experience has moved from assistance on well-defined sub-problems to full codebase delegation and orchestration.
