The Role of Databricks in Trader Surveillance

Jonny Press

Our Surveillance practice has been tracking developments in trader surveillance technology, particularly as firms grapple with mounting regulatory pressures, surging data volumes, and the practical limitations of their existing surveillance infrastructure. A recent report from 1LOD highlights just how acute these challenges have become across the industry.

After reviewing their latest research, we see an opportunity for Databricks to address several critical gaps in current surveillance architectures:

- bringing together structured and unstructured data for advanced analytics

- empowering investigators to leverage AI tooling on the combined dataset

- empowering less technical analysts

- a strong governance model

- future-proofing for both tooling and scalability

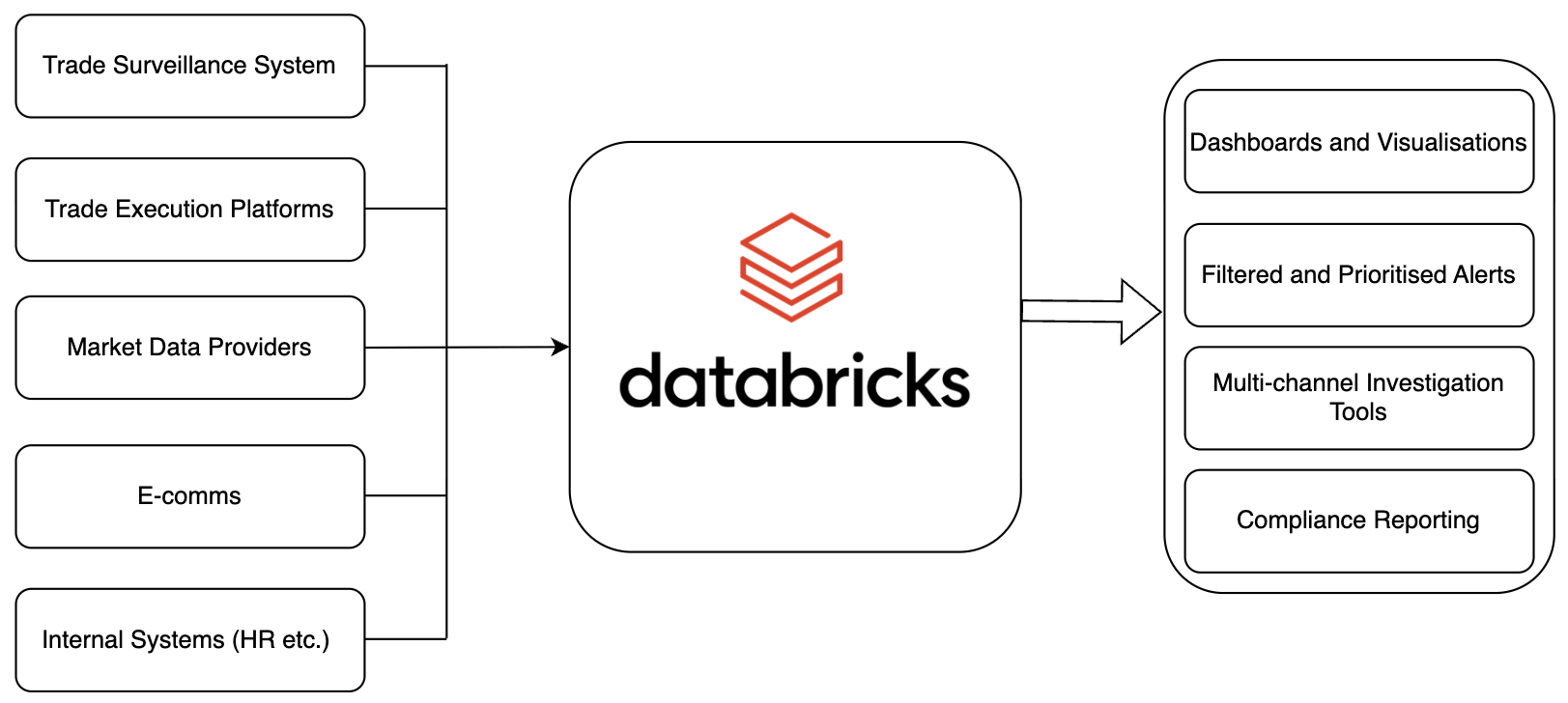

Most large firms run surveillance through a collection of specialised vendors: one or more systems for trade surveillance, another for communications surveillance, often accompanied by a separate system for case management and reference data. While you could theoretically rebuild each of these in Databricks from scratch, that’s neither practical nor advisable. Instead, we recommend using Databricks to enhance what’s already in place. This may require loading some data into Databricks, or could lean on Lakehouse Federation to query data directly in the existing systems.

The key features of Databricks which come to the fore are:

- Lakehouse architecture for data centralisation

- MLflow to manage the machine learning lifecycle

- Mosaic AI to provide generative AI tooling, including LLMs

- AI/BI Genie for data investigations

- Unity Catalog for data and model lineage and governance

Centralising Surveillance Data

The typical surveillance setup scatters critical data across multiple systems: market data, orders and executions live in one database, chat and email archives in another, employee reference data somewhere else. Databricks’ Delta Lake architecture lets you bring these together, handling both structured and unstructured data, while Unity Catalog’s lineage and governance features help surface ingestion gaps and support completeness checks.

This consolidation enables the kind of cross-channel analysis that regulators increasingly expect. Instead of investigating a suspicious trade in isolation, analysts can examine related communications, identify connected accounts, and spot coordination patterns that span multiple markets or asset classes.
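To make the cross-channel idea concrete, here is a minimal sketch of pulling a trader’s communications around a flagged trade. In Databricks this would typically be a Spark join on trader ID with a time-range condition; the version below uses plain Python over in-memory records so it runs anywhere. The `Trade` and `Message` schemas and the 24-hour window are illustrative assumptions, not a prescribed data model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Trade:
    trade_id: str
    trader: str
    instrument: str
    ts: datetime


@dataclass
class Message:
    msg_id: str
    sender: str
    channel: str  # hypothetical values, e.g. "chat", "email", "voice-transcript"
    text: str
    ts: datetime


def related_comms(trade: Trade, messages: list[Message],
                  window: timedelta = timedelta(hours=24)) -> list[Message]:
    """Return the trader's messages on any channel within `window` either side of the trade."""
    start, end = trade.ts - window, trade.ts + window
    hits = [m for m in messages
            if m.sender == trade.trader and start <= m.ts <= end]
    # Chronological order across channels, so the analyst sees one timeline.
    return sorted(hits, key=lambda m: m.ts)
```

Because every channel lands in the same `messages` collection, the analyst gets one timeline instead of querying the chat and email archives separately.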

Unified data also opens up several analytical and AI capabilities that are difficult or impossible with siloed systems:

- Behavioral baselines that incorporate both trading patterns and communication styles

- Cross-market manipulation detection that correlates activity across venues and time zones

- Combining data across internal and external comms channels, such as social media or public chatrooms

- Investigation workflows where analysts can pivot between data sets (trade, comms, internal) without switching systems

- Machine learning models trained on complete datasets rather than fragmented samples
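As an illustration of the first capability, a behavioural baseline can be as simple as z-scoring each of a trader’s daily metrics against their own history. The sketch below assumes hypothetical daily metrics (`trades`, `messages`); a production baseline would likely use rolling windows and more robust statistics. The example it is built around is a trader whose trading looks normal but whose monitored messaging suddenly drops, a pattern that only shows up when trade and comms data sit side by side.

```python
import math
from statistics import mean, pstdev


def baseline_zscores(history: list[dict[str, float]],
                     today: dict[str, float]) -> dict[str, float]:
    """Z-score each of today's metrics against the same trader's own history."""
    scores = {}
    for metric, value in today.items():
        past = [day[metric] for day in history]
        mu, sigma = mean(past), pstdev(past)
        if sigma == 0:
            # Flat history: no change scores 0, any change is treated as infinitely unusual.
            scores[metric] = 0.0 if value == mu else math.copysign(math.inf, value - mu)
        else:
            scores[metric] = (value - mu) / sigma
    return scores


def is_anomalous(scores: dict[str, float], threshold: float = 3.0) -> bool:
    """Flag the day if any metric deviates by more than `threshold` standard deviations."""
    return any(abs(z) > threshold for z in scores.values())
```

With a history of roughly 10 trades and 40 messages a day, a day with 11 trades but only 5 messages leaves the trading score near zero while the messaging score falls far below -3, so the day is flagged.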

Applying AI More Strategically

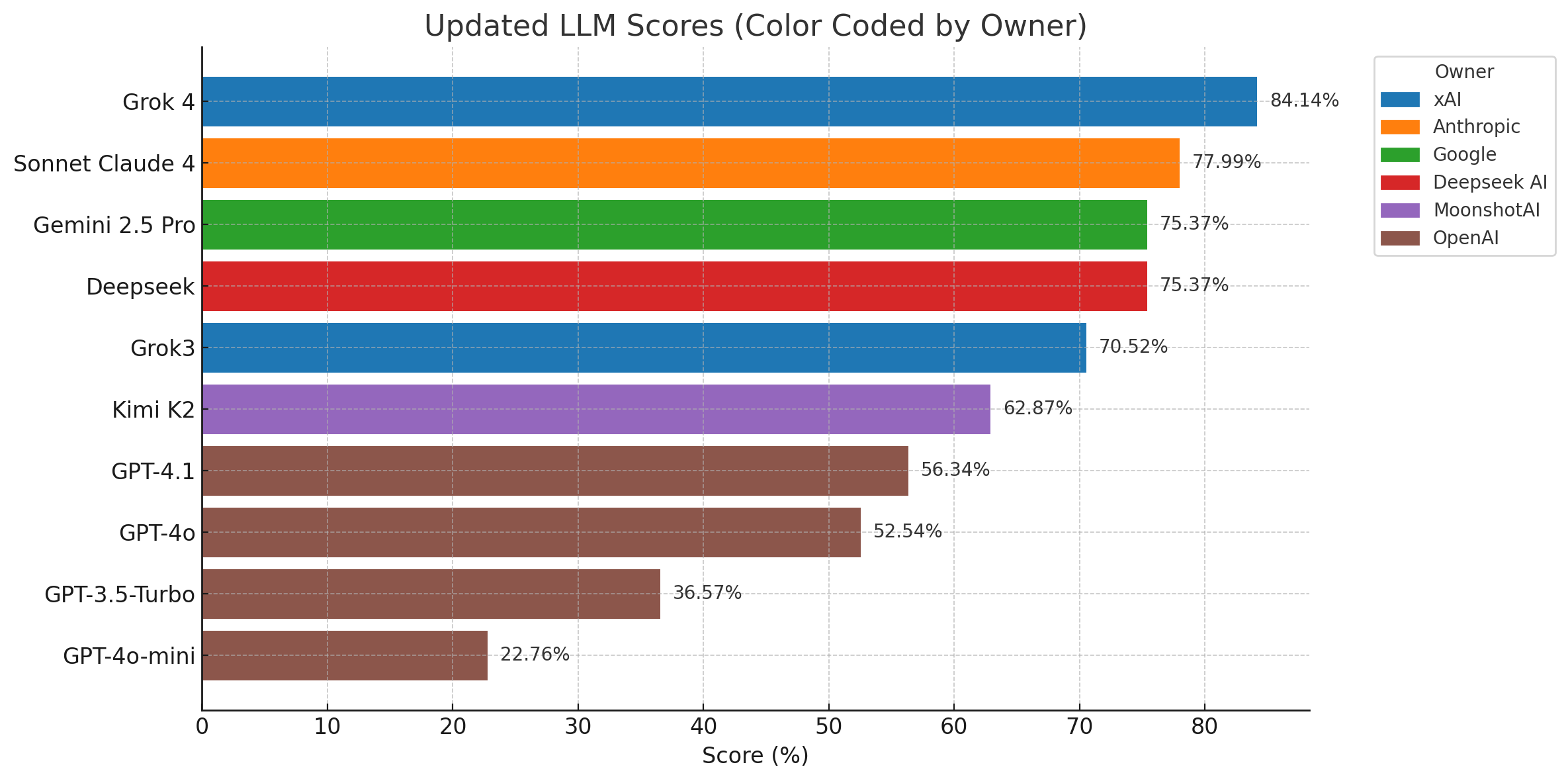

Most surveillance vendors now claim AI capabilities, but the implementations are often disappointing. Basic keyword matching gets rebranded as “natural language processing” or simple statistical outliers get labelled as “machine learning.” Databricks offers more sophisticated options, though the key is applying them to specific surveillance problems rather than chasing the latest AI trends.

False positive reduction is a good starting example. Traditional rule-based systems flag anything that meets predetermined criteria, generating alerts that turn out to be routine trading activity. Surveillance teams can, for example, train a classification model that scores alerts on their likelihood of being false positives and manage it with MLflow. Trained on historical alerts labelled by analysts (true issue vs. false alarm), the model can be deployed via MLflow Model Serving in Databricks, so that incoming alerts are automatically given a “false-positive probability” score before they reach the surveillance analyst team. The same techniques can be used to fine-tune alert parameters and reduce the occurrence of false positives in the first place.
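The modelling step itself can be quite modest. The sketch below trains a logistic regression by batch gradient descent in plain Python, standing in for whatever classifier a team would actually train and then track, register and serve through MLflow (those MLflow steps are omitted here). The two features, notional relative to the trader’s typical size and a hypothetical “known hedge” flag, are illustrative assumptions; label `1` means an analyst closed the alert as a false positive.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(features: list[list[float]], labels: list[int],
                   lr: float = 0.1, epochs: int = 2000) -> tuple[list[float], float]:
    """Fit logistic-regression weights and bias with full-batch gradient descent."""
    n, n_features = len(features), len(features[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(features, labels):
            # Gradient of the log-loss is (prediction - label) times the input.
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def false_positive_probability(model: tuple[list[float], float], x: list[float]) -> float:
    """Score a new alert: probability that analysts would close it as a false positive."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
```

In this toy setup, small hedging trades score close to 1 and oversized unhedged trades close to 0, which is the ordering an analyst queue would be sorted by.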

For communications monitoring, the technology now matches regulatory expectations. Rather than sampling subsets of messages or relying on keyword searches that miss context and slang, natural language models can process complete communication archives, identifying sentiment shifts, coded language, and discussion threads that span multiple platforms and participants. For instance, if Trader X is flagged for a big trade, an NLP search can be run on the fly over Trader X’s messages from the prior week for any talk of that asset or any counterparties involved. Databricks’ Mosaic AI and LakehouseIQ NLP tooling let analysts do this within minutes during an investigation.
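The Trader X workflow splits into two steps: narrow the archive to the trader’s messages in the lookback window, then judge each message for relevance. The sketch below assumes a hypothetical message shape (`sender`, `ts`, `text`) and makes the relevance judge pluggable. The default `keyword_relevance` is deliberately a simple placeholder; the point of the paragraph is that in practice this step would be an LLM call (for example via Databricks’ `ai_query` SQL function or a model serving endpoint), which can pick up slang and coded references that string matching misses.

```python
from datetime import datetime, timedelta
from typing import Callable

# Hypothetical record shape: {"sender": str, "ts": datetime, "text": str}
Message = dict


def keyword_relevance(text: str, terms: list[str]) -> bool:
    """Placeholder judge: case-insensitive substring match on asset/counterparty names.

    This is the step an LLM-based classifier would replace in a real deployment.
    """
    lowered = text.lower()
    return any(term.lower() in lowered for term in terms)


def review_prior_messages(
    messages: list[Message],
    trader: str,
    flagged_at: datetime,
    terms: list[str],
    lookback: timedelta = timedelta(days=7),
    relevance_fn: Callable[[str, list[str]], bool] = keyword_relevance,
) -> list[Message]:
    """Return the trader's messages from the lookback window that the judge deems relevant."""
    start = flagged_at - lookback
    window = [m for m in messages
              if m["sender"] == trader and start <= m["ts"] < flagged_at]
    return [m for m in window if relevance_fn(m["text"], terms)]
```

Keeping the window selection separate from the judge means the cheap filter runs first and only a week of one trader’s messages is sent to the (more expensive) model.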

Pattern detection presents another practical application. Most surveillance systems excel at identifying individual rule violations but miss coordinated behaviours. AI models can spot correlated trading across different accounts, synchronised communication spikes before market-moving events, or gradual position accumulation designed to avoid traditional surveillance triggers. Libraries like GraphFrames allow analysis of networks of relationships at scale, enabling surveillance teams to reveal clusters of related actors whose coordinated activity is hard to detect without graph and network analytics.
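A basic version of the graph approach is to link accounts that trade the same instrument on the same side within a short interval, then find connected components of that graph. GraphFrames provides `connectedComponents()` for doing this on Spark at scale; the sketch below uses a small union-find in plain Python instead. The trade record shape (`account`, `instrument`, `side`, `ts` in seconds) and the 60-second window are assumptions for illustration.

```python
def co_trading_edges(trades: list[dict], window_seconds: float = 60) -> list[tuple[str, str]]:
    """Link accounts that traded the same instrument on the same side within window_seconds."""
    edges = []
    ordered = sorted(trades, key=lambda t: (t["instrument"], t["side"], t["ts"]))
    for i, t in enumerate(ordered):
        for u in ordered[i + 1:]:
            if (u["instrument"], u["side"]) != (t["instrument"], t["side"]):
                break  # sorted order: no further matches for this instrument/side
            if u["ts"] - t["ts"] > window_seconds:
                break  # sorted by time: later trades are even further away
            if u["account"] != t["account"]:
                edges.append((t["account"], u["account"]))
    return edges


def connected_components(edges: list[tuple[str, str]]) -> list[set[str]]:
    """Group nodes into clusters with union-find; only nodes that appear in an edge are returned."""
    parent: dict[str, str] = {}

    def find(node: str) -> str:
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    for a, b in edges:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    groups: dict[str, set[str]] = {}
    for node in parent:
        groups.setdefault(find(node), set()).add(node)
    return sorted(groups.values(), key=len, reverse=True)
```

Clusters that recur across many instruments or days are the ones worth an analyst’s attention; a single co-trade is usually coincidence.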

Supporting Investigation Teams

Surveillance software tools provide investigation interfaces designed to enable the analyst to make decisions on the alerts that have been generated. At times, though, surveillance analysts may need to dig deeper or explore connections the software wasn’t designed to surface.

Databricks addresses this through analyst-friendly data access via AI/BI Genie. Investigation teams can create their own analytical environments without waiting for IT resources or vendor customisations. Less technical staff can use natural language querying to ask direct questions such as “show me trader Jane’s activity around earnings announcements”, “show me any unusually large trades in energy futures by trader Jim in the last 7 days”, or “show me any other large trades by other traders in that market or product within 1 hour of Jane’s suspicious activity”, rather than grappling with a query language or submitting a help desk ticket. In practice, this means a surveillance analyst can investigate a hunch or drill into an alert trend just by asking follow-up questions in natural language.
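Behind the natural-language interface, Genie answers questions by generating SQL against governed tables. The sketch below is a hand-written approximation of the kind of query the Jim question might become, run against an in-memory SQLite table so it is self-contained. The schema, the sample rows and the definition of “unusually large” (more than twice the trader’s average notional in that asset class) are all assumptions; pinning down definitions like this is exactly what a team would want to do when configuring a Genie space. Date arithmetic would also be written differently in Databricks SQL.

```python
import sqlite3

# Hypothetical sample data: (trade_id, trader, asset_class, product, notional, ts)
DEMO_ROWS = [
    ("T1", "jim", "energy_futures", "CL", 100.0, "2024-04-01 09:00:00"),
    ("T2", "jim", "energy_futures", "NG", 120.0, "2024-04-10 11:00:00"),
    ("T3", "jim", "energy_futures", "CL", 90.0, "2024-05-03 10:00:00"),
    ("T4", "jim", "energy_futures", "NG", 110.0, "2024-05-04 13:15:00"),
    ("T5", "jim", "energy_futures", "CL", 1000.0, "2024-05-06 15:30:00"),
    ("T6", "jim", "equities", "XYZ", 5000.0, "2024-05-06 15:45:00"),
    ("T7", "jane", "energy_futures", "CL", 2000.0, "2024-05-07 08:00:00"),
]

# Approximate translation of "show me any unusually large trades in energy futures
# by trader Jim in the last 7 days" (assumed definition of "unusually large").
LARGE_TRADES_SQL = """
SELECT trade_id, product, notional, ts
FROM trades
WHERE trader = :trader
  AND asset_class = 'energy_futures'
  AND ts >= datetime(:as_of, '-7 days')
  AND ts <= :as_of
  AND notional > 2 * (
      SELECT AVG(notional) FROM trades
      WHERE trader = :trader AND asset_class = 'energy_futures'
  )
ORDER BY notional DESC
"""


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory trades table populated with the sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE trades (trade_id TEXT, trader TEXT, asset_class TEXT, "
                 "product TEXT, notional REAL, ts TEXT)")
    conn.executemany("INSERT INTO trades VALUES (?, ?, ?, ?, ?, ?)", DEMO_ROWS)
    return conn


def large_trades(conn: sqlite3.Connection, trader: str, as_of: str) -> list[tuple]:
    """Run the generated-style query for one trader as of a given timestamp."""
    return conn.execute(LARGE_TRADES_SQL, {"trader": trader, "as_of": as_of}).fetchall()
```

A follow-up question (“and what about Jane?”) amounts to re-running the same query with different parameters, which is why conversational drill-down works well on a consolidated dataset.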

There is a large dividend to be found in feeding a rich dataset into Databricks for ad hoc investigation and exploration.

Governance and Completeness

Surveillance teams function as data stewards, responsible for ensuring their information is complete, accurate, and audit-ready. Most firms struggle with basic questions like “where did this alert data come from?” or “how do we know we’re capturing all relevant communications?”

Unity Catalog provides the kind of data governance that surveillance operations require: centralised access controls, lineage tracking, audit trails that satisfy regulatory examinations, and fine-grained, role-based permissions that control who can view or modify data and models.

When AI models influence surveillance decisions, MLflow keeps those models reproducible and documented, and can record the explainability artefacts that accompany them. These are critical capabilities when justifying investigative conclusions to regulators. For a given surveillance alert or report generated within the Databricks environment, you can trace back which model or rule produced it, what data that model was trained on, and which raw sources fed into that data. If regulators ask “who viewed or changed this model?”, the team can readily produce the audit trail.
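The trace-back described above is a walk over a lineage graph from an alert to its upstream model, tables and raw sources. Unity Catalog captures lineage automatically and exposes it in the UI and in system tables (such as `system.access.table_lineage`); the sketch below shows only the traversal logic over a hand-written, hypothetical lineage map, so every node name in it is illustrative.

```python
from collections import deque

# Hypothetical lineage map: each node lists the nodes it was derived from.
LINEAGE = {
    "alert:A-1042": ["model:fp_scorer/v3"],
    "model:fp_scorer/v3": ["table:surveillance.gold.alert_features"],
    "table:surveillance.gold.alert_features": [
        "table:surveillance.silver.orders",
        "table:surveillance.silver.chat_messages",
    ],
    "table:surveillance.silver.orders": ["source:oms_feed"],
    "table:surveillance.silver.chat_messages": ["source:chat_archive"],
}


def trace_upstream(node: str, lineage: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk returning every upstream dependency of `node`, nearest first."""
    seen, order, queue = {node}, [], deque([node])
    while queue:
        current = queue.popleft()
        for parent in lineage.get(current, []):
            if parent not in seen:  # guard against shared ancestors being listed twice
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order
```

Calling `trace_upstream("alert:A-1042", LINEAGE)` lists the model first, then the feature table, then the silver tables, and finally the two raw sources: the chain a regulator would ask to see.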

In practice, effective data governance has other benefits too. It reduces the risk of inadvertent gaps (e.g. “we didn’t realize we weren’t ingesting that chat system’s data for 2 weeks”), which lineage monitoring can catch. It also streamlines compliance with privacy laws: if certain communications need to be redacted or users’ data privacy preferences honoured, the centralised controls can enforce that globally. Notably, Unity Catalog’s lineage can help identify data quality or completeness issues: if a source system fails to deliver data, the lineage graph may show a break that the team can be alerted to. This kind of proactive monitoring helps ensure the surveillance data is “complete and accurate” as required.
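The two-week chat gap is the kind of problem a simple completeness check catches early. Databricks has its own monitoring features for this; the sketch below shows only the underlying check, assuming hypothetical daily row counts per source, and reports missing days as contiguous runs so a fortnight-long outage reads as one finding rather than fourteen. It checks calendar days; trade feeds would normally be checked against a business-day calendar.

```python
from datetime import date, timedelta


def find_ingestion_gaps(daily_counts: dict[str, dict[date, int]],
                        start: date, end: date, min_rows: int = 1) -> dict[str, list[date]]:
    """Return, per source, every day in [start, end] with fewer than min_rows rows ingested."""
    n_days = (end - start).days + 1
    gaps = {}
    for source, counts in daily_counts.items():
        missing = [start + timedelta(days=i) for i in range(n_days)
                   if counts.get(start + timedelta(days=i), 0) < min_rows]
        if missing:
            gaps[source] = missing
    return gaps


def collapse_runs(days: list[date]) -> list[tuple[date, date]]:
    """Collapse a sorted list of dates into (first, last) runs of consecutive days."""
    runs: list[tuple[date, date]] = []
    for d in days:
        if runs and d - runs[-1][1] == timedelta(days=1):
            runs[-1] = (runs[-1][0], d)
        else:
            runs.append((d, d))
    return runs
```

Run daily over a trailing window, a non-empty result for any source is the signal to investigate before the gap becomes a regulatory finding.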

Planning for the Future

Technology decisions in surveillance carry long-term consequences. Systems implemented today need to handle whatever data volumes, regulatory requirements, and analytical demands emerge over the next decade.

Databricks’ architecture scales with business needs rather than requiring upgrades when data volumes increase or new surveillance requirements appear. The platform’s development trajectory, in both core platform improvements and AI tooling, suggests that surveillance use cases will continue to be well catered for. Indeed, the recent addition of Spark Structured Streaming Real-Time Mode extends the platform to event-driven use cases.
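Event-driven surveillance means evaluating each trade as it arrives rather than in a batch. In Structured Streaming this would typically be expressed as a windowed or stateful aggregation; the sketch below shows the same idea in plain Python, flagging a trader whose summed notional inside a sliding time window exceeds a limit. The window length, limit and event fields are hypothetical, and the sketch assumes each trader’s events arrive in time order (a streaming job would also need to handle late data).

```python
from collections import defaultdict, deque


class RollingNotionalMonitor:
    """Flag a trader whose summed notional within a sliding time window exceeds a limit."""

    def __init__(self, window_seconds: float, limit: float):
        self.window_seconds = window_seconds
        self.limit = limit
        self._events = defaultdict(deque)   # trader -> deque of (ts, notional)
        self._totals = defaultdict(float)   # trader -> running sum over the window

    def on_trade(self, trader: str, ts: float, notional: float) -> bool:
        """Process one trade event; return True if the trader is now over the limit."""
        events = self._events[trader]
        events.append((ts, notional))
        self._totals[trader] += notional
        # Evict events that have fallen out of the window (keeps ts > now - window).
        while events and events[0][0] <= ts - self.window_seconds:
            _, old_notional = events.popleft()
            self._totals[trader] -= old_notional
        return self._totals[trader] > self.limit
```

Keeping a running total per trader makes each event O(1) amortised, which is what lets this style of check keep pace with a live order flow.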

Conclusion

This approach builds on existing surveillance infrastructure rather than replacing it entirely. The goal is to make current systems and tools more effective by augmenting them with the capabilities of Databricks, and to provide the flexibility for future challenges without the disruption and risk of complete technology overhauls.
